Dr. Ehtesham Hasan

Funded By: - Kuwait Foundation for the Advancement of Sciences (KFAS)

Funding Amount: - KD 8000

Year: - 2018

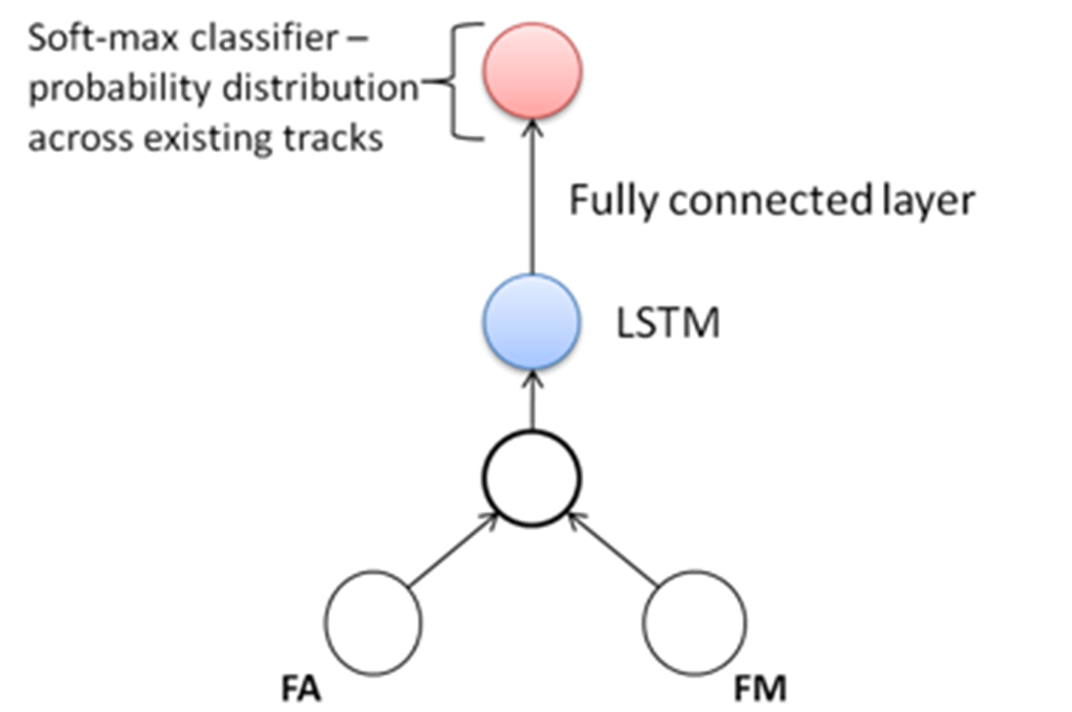

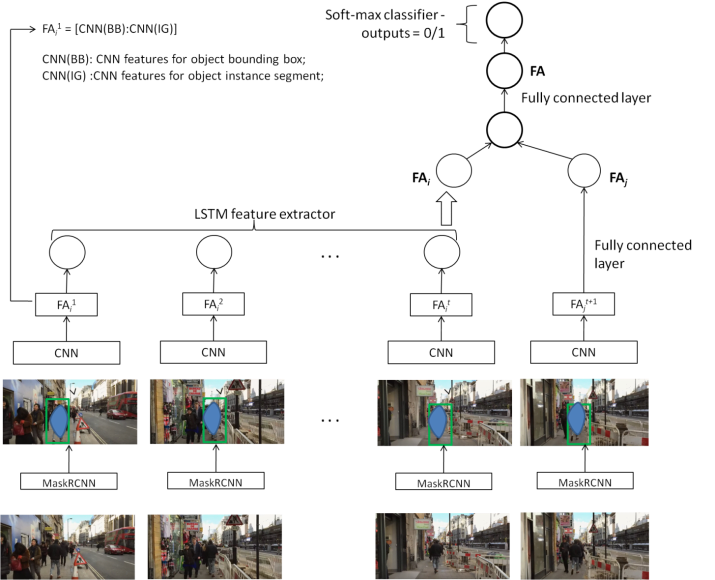

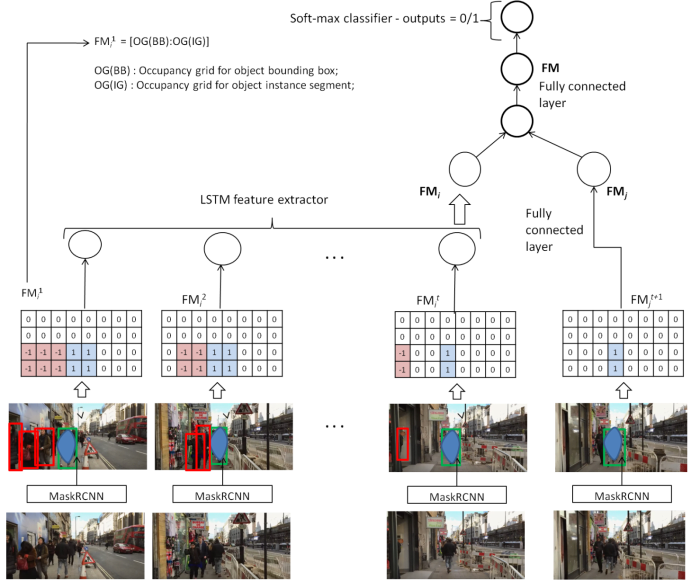

Summary: - We present a novel tracking solution that uses recurrent neural networks to model complex temporal dynamics between objects irrespective of appearance, pose, occlusion and illumination. We follow the tracking-by-detection methodology, using a hierarchical long short-term memory (LSTM) network structure to model the motion dynamics between objects by learning the fusion of appearance and motion cues. The target motion model is learned on object instances detected by the Mask R-CNN detector and represented by their appearance and motion cues. A novel motion coding scheme is designed to anchor the LSTM structure so that it effectively models the motion and relative position between objects in a single representation scheme. The proposed motion and appearance features are fused in an embedded space learned by the hierarchical LSTM structure to predict the object-to-track association.
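The final association step of a tracking-by-detection pipeline can be illustrated with a minimal sketch. It is not the project's implementation: in the work summarized above, the fused score comes from an embedding learned by the hierarchical LSTM, whereas the sketch assumes precomputed appearance and motion similarity scores in [0, 1] and swaps in a fixed weighted average plus greedy matching. The names `fuse_scores`, `greedy_associate`, `weight` and `min_score` are hypothetical.

```python
from typing import Dict, List

Matrix = List[List[float]]  # rows: existing tracks, columns: new detections


def fuse_scores(appearance: Matrix, motion: Matrix, weight: float = 0.5) -> Matrix:
    """Combine two track-by-detection score matrices.

    Assumption: a fixed weighted average stands in for the learned
    LSTM embedding described in the summary.
    """
    return [
        [weight * a + (1.0 - weight) * m for a, m in zip(a_row, m_row)]
        for a_row, m_row in zip(appearance, motion)
    ]


def greedy_associate(scores: Matrix, min_score: float = 0.5) -> Dict[int, int]:
    """Map track index -> detection index, best-scoring pairs first.

    Each track and each detection is used at most once; pairs scoring
    below min_score are never matched.
    """
    candidates = [
        (score, track, det)
        for track, row in enumerate(scores)
        for det, score in enumerate(row)
        if score >= min_score
    ]
    candidates.sort(key=lambda c: c[0], reverse=True)

    assigned: Dict[int, int] = {}
    used_detections = set()
    for _score, track, det in candidates:
        if track in assigned or det in used_detections:
            continue
        assigned[track] = det
        used_detections.add(det)
    return assigned


if __name__ == "__main__":
    # Hypothetical similarity scores for two tracks and two detections.
    appearance = [[0.9, 0.2], [0.3, 0.8]]
    motion = [[0.8, 0.1], [0.2, 0.4]]
    print(greedy_associate(fuse_scores(appearance, motion)))
```

Tracks left out of the returned mapping have no detection above the threshold in the current frame; a full tracker would typically keep them alive for a few frames to bridge occlusions, and would start new tracks from unmatched detections.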

RNN architecture for appearance feature modeling

RNN architecture for motion feature modeling