Professor M Murugappan

Funded By: - Kuwait Foundation for the Advancement of Sciences (KFAS)

Funding Amount: - KD 4500

Year: - 2019

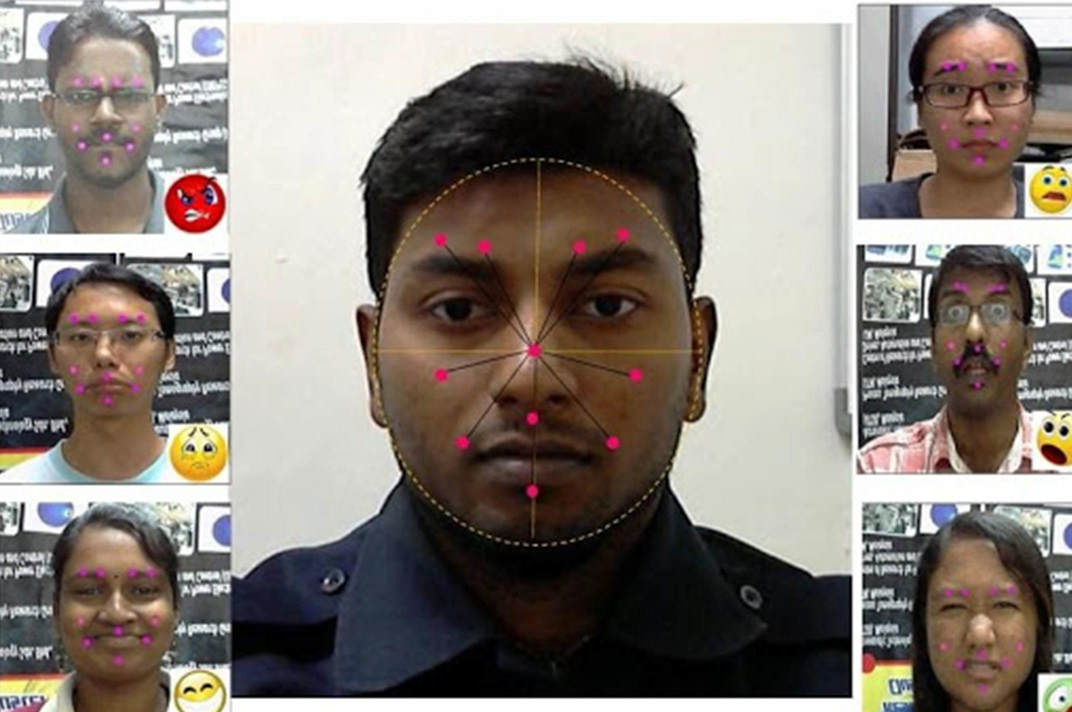

Summary: - Emotion recognition is an important method for understanding human affective behavior, and it can improve both person-to-person communication and communication between humans and machines. It also plays a significant role in developing intelligent humanoid systems and clinical diagnosis systems for assessing emotional behavior in clinical disorders such as bipolar disorder, anxiety, and depression. Identifying emotional expressions from a subject's face is a simple approach compared to other modalities, and it is essential for developing intelligent systems for a variety of applications. However, facial expressions do not necessarily reveal the subject's true internal feelings and can easily be masked by the subject. Hence, we aim to analyze subjects' facial expressions together with their brain electrical activity, recorded through electroencephalogram (EEG) signals, to develop a more robust real-time emotion recognition system. We have performed studies on healthy control subjects, assessing their facial emotional expressions through virtual markers and their brain electrical activity through EEG signals, to detect six emotions (happiness, anger, fear, sadness, surprise, and disgust). We found that EEG signals detect the subject's emotions more reliably and with higher accuracy than facial expressions tracked using virtual markers. We are investigating ways to extract meaningful information from EEG signals over different frequency ranges (alpha, beta, and gamma bands) and from a limited number of virtual markers to track changes in the subjects' emotional state effectively. We expect this study to generate valuable information on the relationship between facial expressions and brain electrical activity, supporting a more robust affective state assessment system based on computational methods.
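As an illustration of what extracting information over the alpha, beta, and gamma ranges can look like, the following is a minimal sketch and not the project's actual pipeline. It estimates the mean spectral power per band for a single channel using a plain FFT periodogram. The band edges, the 128 Hz sampling rate, the function name `band_powers`, and the synthetic test signal are all assumptions chosen for the example; band definitions vary between studies.

```python
import numpy as np

# Assumed band edges in Hz (hypothetical; conventions differ between studies).
BANDS = {"alpha": (8.0, 13.0), "beta": (13.0, 30.0), "gamma": (30.0, 45.0)}


def band_powers(signal, fs):
    """Return the mean periodogram power in each band for one channel."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()  # remove the DC offset
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(signal)) ** 2 / (fs * signal.size)
    powers = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs < hi)  # bins inside [lo, hi)
        powers[name] = float(psd[mask].mean())
    return powers


# Synthetic stand-in for an EEG channel: a strong 10 Hz component
# plus a weaker 20 Hz component, sampled at an assumed 128 Hz for 4 s.
fs = 128.0
t = np.arange(0, 4.0, 1.0 / fs)
x = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 20 * t)

powers = band_powers(x, fs)
dominant = max(powers, key=powers.get)
print(powers, dominant)
```

In practice, per-band values like these would be computed per channel and per time window and then passed to a classifier. A smoothed estimate such as Welch's method is usually preferred over a raw periodogram for noisy recordings.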